Datasets

On this page you will find information about:

Downloading the iGibson Dataset of Scenes and the BEHAVIOR Dataset of Objects

Downloading the Gibson and Stanford 2D-3D-Semantics Datasets of Scenes

Downloading the Matterport3D Dataset of Scenes

Downloading the iGibson Dataset of Scenes and the BEHAVIOR Dataset of Objects

What will you download?

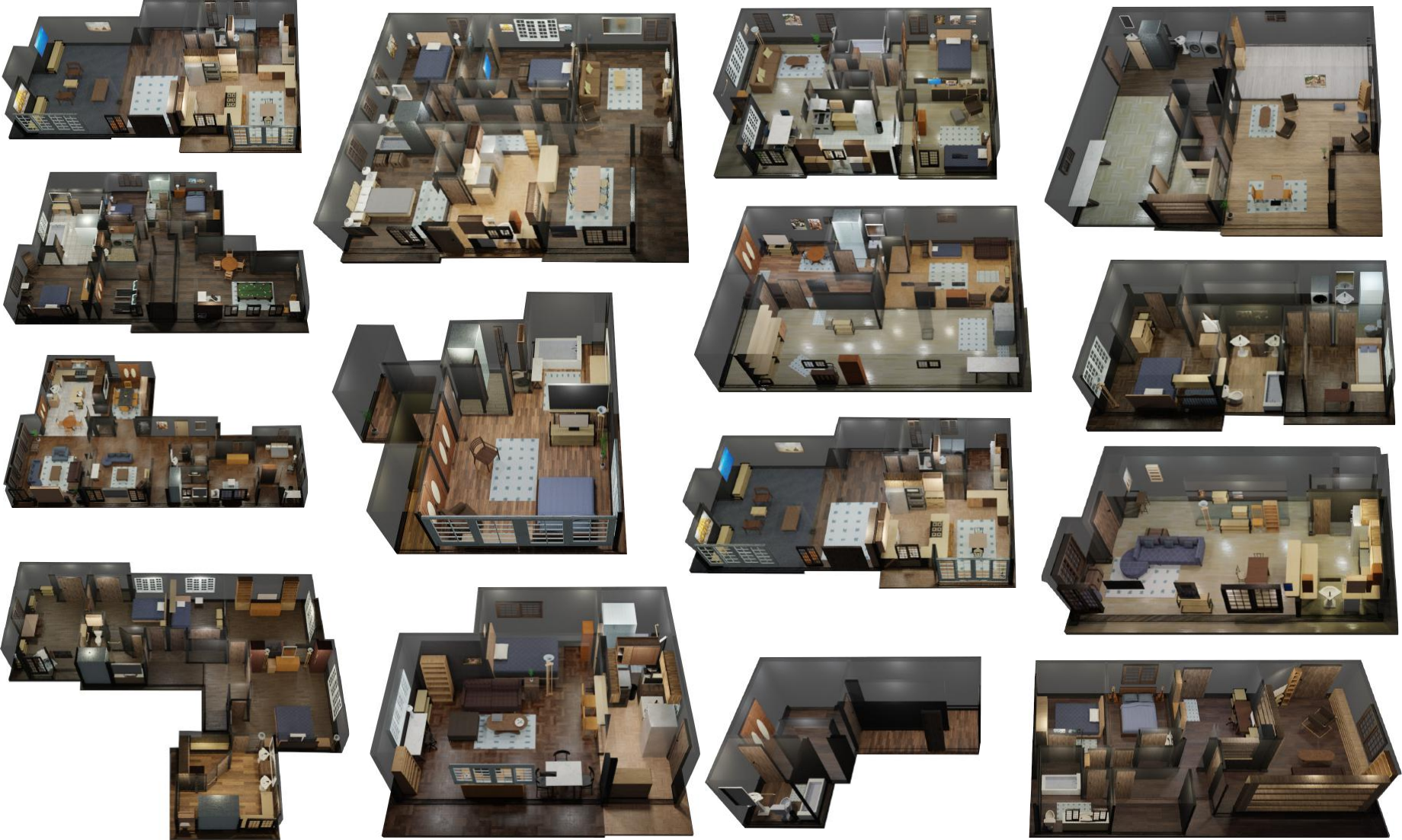

iGibson 1.0 Dataset of Scenes: We annotated fifteen 3D reconstructions of real-world scans and converted them into fully interactive scene models. In this process, we respect the original object-instance layout and object-category distribution. The object models are extended from open-source datasets (ShapeNet Dataset, Motion Dataset, SAPIEN Dataset) enriched with annotations of material and dynamic properties.

iGibson 2.0 Dataset of Scenes: New versions of the fifteen fully interactive scenes, more densely populated with objects.

BEHAVIOR Dataset of Objects: Dataset of object models annotated with physical and semantic properties. The 3D models are free to use within iGibson 2.0 for BEHAVIOR (due to artists’ copyright, models are encrypted and can only to be used within iGibson 2.0).

The following image shows the fifteen fully interactive scenes in the iGibson Dataset:

To download the datasets, follow these steps:

Fill out the license agreement in this form.

After submitting the form, you will receive a key (igibson.key). Copy it into the folder that will contain the dataset; by default, this is your_installation_path/igibson/data.

Download the datasets from here (size ~20GB).

Decompress the file into the desired folder:

tar -xvf ig_dataset.tar.gz -C your_installation_path/igibson/data

(Optional) After decompressing the archive, you may need to update the config file (your_installation_path/igibson/global_config.yaml) so that the entry ig_dataset_path reflects the location of ig_dataset.

After this process, you will be able to sample and use the scenes and objects in iGibson, for example, to evaluate your embodied AI solutions in the BEHAVIOR benchmark.
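As a quick sanity check after the steps above, the following minimal sketch verifies that the key file and the dataset folder are where iGibson expects them by default. The function name check_ig_dataset is hypothetical (it is not part of iGibson), and the sketch assumes the archive unpacks into a folder named ig_dataset inside the data folder.

```python
from pathlib import Path


def check_ig_dataset(data_dir):
    """Return a list of problems found in data_dir (empty if none).

    Hypothetical helper, not part of the iGibson API.
    """
    data_dir = Path(data_dir)
    problems = []
    # The license key received after submitting the form.
    if not (data_dir / "igibson.key").is_file():
        problems.append("missing key file: igibson.key")
    # Assumption: the archive unpacks into a folder named ig_dataset.
    if not (data_dir / "ig_dataset").is_dir():
        problems.append("missing dataset folder: ig_dataset")
    return problems


if __name__ == "__main__":
    # Replace the placeholder with your actual installation path.
    for problem in check_ig_dataset("your_installation_path/igibson/data"):
        print(problem)
```

If you stored the dataset elsewhere, pass the parent folder of the location you set in ig_dataset_path instead of the default path.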

A description of the file structure and format of the files in the dataset can be found here.

Cubicasa / 3D Front Dataset Support: We provide support for the Cubicasa and 3D Front datasets, which offer more than 10000 additional scenes (with less furniture than our fifteen scenes). To import them into iGibson, follow the instructions here.

Downloading the Gibson and Stanford 2D-3D-Semantics Datasets of Scenes

What will you download?

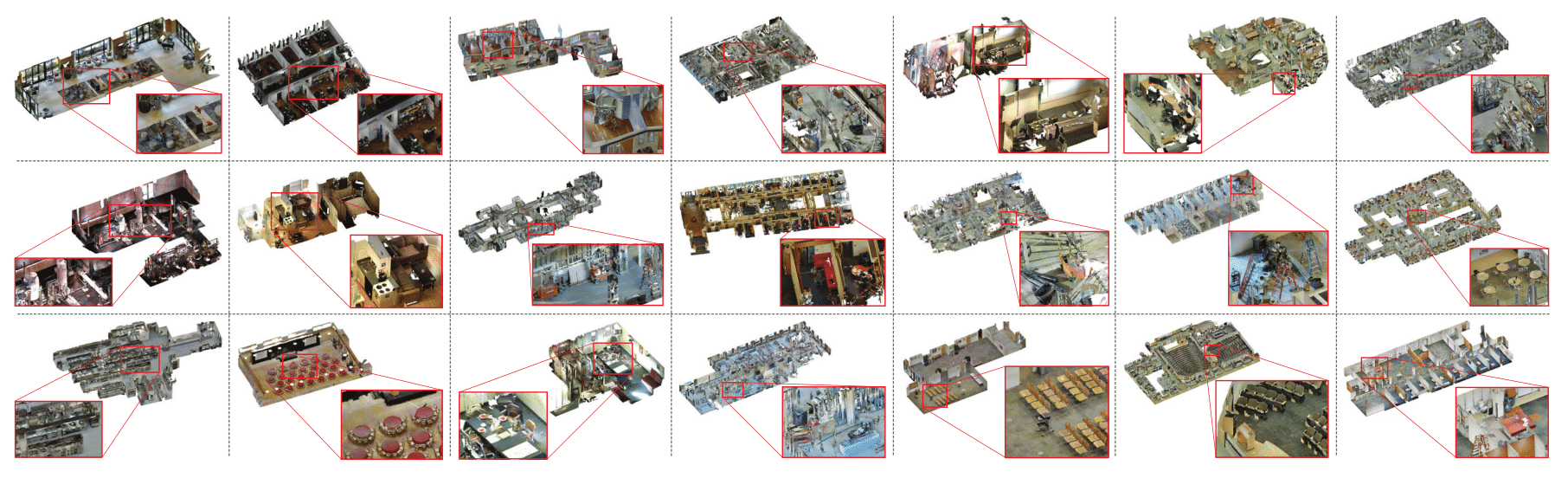

Gibson static scenes: more than 500 reconstructions of homes and offices captured with a Matterport device. These models keep the texture observed with the sensor, but contain some irregularities, especially on reflective surfaces and thin elements such as chair legs.

Stanford 2D-3D-Semantics scenes: 7 reconstructions of Stanford offices annotated with semantic information.

Files included in the dataset:

All scenes, 572 scenes (108GB): gibson_v2_all.tar.gz

4+ partition, 106 scenes, with textures better packed (2.6GB): gibson_v2_4+.tar.gz

Stanford 2D-3D-Semantics, 7 scenes (1.4GB): 2d3ds_for_igibson.zip

We have updated these datasets for use with iGibson so that users can keep developing and studying pure navigation solutions. The following link will bring you to a license agreement: form

After filling in the agreement, you will obtain a download URL.

You can download the data manually and store it in the path set in your_installation_path/igibson/global_config.yaml (default and recommended: g_dataset: your_installation_path/igibson/data/g_dataset).

Alternatively, you can run a single command to download the dataset, decompress, and place it in the correct folder:

python -m igibson.utils.assets_utils --download_dataset URL

The Gibson Environment Dataset consists of 572 models and 1440 floors. The models cover a diverse set of spaces, including households, offices, hotels, venues, museums, hospitals, construction sites, etc. Visualizations of all spaces in Gibson can be seen here. The following image shows some of the environments:

Gibson Dataset Metadata: Each space in the database has some metadata with the following attributes associated with it. The metadata is available in this JSON file.

id # the name of the space, e.g. "Albertville"

area # total metric area of the building, e.g. "266.125" sq. meters

floor # number of floors in the space, e.g. "4"

navigation_complexity # navigation complexity metric, e.g. "3.737" (see the paper for definition)

room # number of rooms, e.g. "16"

ssa # Specific Surface Area (A measure of clutter), e.g. "1.297" (see the paper for definition)

split_full # if the space is in train/val/test/none split of Full partition

split_full+ # if the space is in train/val/test/none split of Full+ partition

split_medium # if the space is in train/val/test/none split of Medium partition

split_tiny # if the space is in train/val/test/none split of Tiny partition
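The attributes above can be queried with a few lines of Python. The sketch below is illustrative only: it assumes the metadata is a JSON list with one object per space and that values are stored as strings (as the quoted examples suggest). The sample records combine the example values listed above with made-up split assignments, and "HypotheticalSpace" is not a real Gibson space. The helper names are hypothetical.

```python
import json

# Illustrative records only. The keys follow the attribute list above; the
# top-level layout (a list of objects) and the split values are assumptions.
SAMPLE_METADATA = json.loads("""
[
  {"id": "Albertville", "area": "266.125", "floor": "4",
   "navigation_complexity": "3.737", "room": "16", "ssa": "1.297",
   "split_full": "train", "split_full+": "train",
   "split_medium": "train", "split_tiny": "none"},
  {"id": "HypotheticalSpace", "area": "100.5", "floor": "1",
   "navigation_complexity": "1.5", "room": "5", "ssa": "1.1",
   "split_full": "val", "split_full+": "val",
   "split_medium": "none", "split_tiny": "train"}
]
""")


def spaces_in_split(records, partition, split):
    """Return the ids of spaces assigned to `split` in the given partition.

    `partition` is one of "full", "full+", "medium", "tiny";
    `split` is one of "train", "val", "test", "none".
    """
    key = "split_" + partition
    return [r["id"] for r in records if r.get(key) == split]


def total_area(records):
    """Sum the floor area (square meters) over all records."""
    # Assumption: numeric attributes are stored as strings.
    return sum(float(r["area"]) for r in records)


if __name__ == "__main__":
    print(spaces_in_split(SAMPLE_METADATA, "medium", "train"))
    print(total_area(SAMPLE_METADATA))
```

To use the real metadata, replace SAMPLE_METADATA with the result of json.load on the downloaded JSON file and adjust the parsing if its layout differs from the one assumed here.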

Floor Number: Total number of floors in each model. We calculate floor numbers using distinctive camera locations. We use sklearn.cluster.DBSCAN to cluster these locations by height and set the minimum cluster size to 5. This means areas with at least 5 sweeps are treated as one single floor. This helps us capture small building spaces such as backyards, attics, and basements.

Area: Total floor area of each model. We calculate the total floor area by summing the area of each floor. This is done by sampling point cloud locations based on floor height and fitting a scipy.spatial.ConvexHull to the sampled locations.

SSA: Specific surface area, the ratio of the inner mesh surface to the volume of the convex hull of the mesh. This is a measure of clutter in the models: if the inner space contains a large amount of furniture, objects, etc., the model will have a high SSA.

Navigation Complexity: The highest complexity of navigating between arbitrary points within the model. We sample arbitrary point pairs inside the model and calculate the A* navigation distance between them. Navigation complexity is equal to the A* distance divided by the straight-line distance between the two points. We compute the highest navigation complexity for every model. Note that all point pairs are sampled within the same floor.

Subjective Attributes: We examine each model manually and note its subjective attributes. These include furnishing style, house shape, whether it has long stairs, etc.
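The navigation complexity defined above (A* distance divided by straight-line distance, maximized over point pairs) can be illustrated with a small sketch. This is not the code used to produce the dataset metadata: it assumes a toy 4-connected occupancy grid (0 = free, 1 = obstacle) and a fixed list of point pairs instead of random sampling, and the function names are hypothetical.

```python
import heapq
import math


def astar_distance(grid, start, goal):
    """Length of the shortest 4-connected path between two free cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    best = {start: 0}
    frontier = [(heuristic(start), 0, start)]
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            return cost
        if cost > best[cell]:
            continue  # stale queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best.get(nxt, math.inf):
                    best[nxt] = new_cost
                    heapq.heappush(frontier, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


def navigation_complexity(grid, pairs):
    """Highest ratio of A* distance to straight-line distance over the pairs."""
    ratios = []
    for start, goal in pairs:
        if start == goal:
            continue  # ratio is undefined for identical points
        path_length = astar_distance(grid, start, goal)
        if path_length is None:
            continue  # skip unreachable pairs
        straight = math.hypot(goal[0] - start[0], goal[1] - start[1])
        ratios.append(path_length / straight)
    return max(ratios) if ratios else None


# A wall forces a detour between the left ends of the top and bottom rows.
GRID = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]

if __name__ == "__main__":
    print(navigation_complexity(GRID, [((0, 0), (2, 0)), ((0, 0), (0, 2))]))
```

In the toy grid, the pair (0, 0) to (2, 0) is 2 cells apart in a straight line but needs a 6-step path around the wall, so it dominates the maximum.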

Gibson Dataset Format: Each space in the database has its own folder. All the modalities and metadata for each space are contained in that folder.

mesh_z_up.obj # 3d mesh of the environment, it is also associated with an mtl file and a texture file, omitted here

floors.txt # floor height

floor_render_{}.png # top down views of each floor

floor_{}.png # top down views of obstacles for each floor

floor_trav_{}.png # top down views of traversable areas for each floor

For the maps, each pixel represents 0.01m, and the center of the image corresponds to (0,0) in the mesh, as well as in the pybullet coordinate system.
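The map convention above (0.01m per pixel, image center at the origin) can be sketched as a pair of conversion functions. The axis orientation is an assumption that the text does not specify: here x grows with the column index and y with the row index, so verify it against your data before relying on it. The function names are hypothetical and not part of iGibson.

```python
MAP_RESOLUTION = 0.01  # meters per pixel, as stated above


def pixel_to_world(row, col, height, width, resolution=MAP_RESOLUTION):
    """Convert a pixel (row, col) to (x, y) in meters, image center at (0, 0)."""
    # Assumption: x grows with the column index and y with the row index.
    x = (col - width / 2.0) * resolution
    y = (row - height / 2.0) * resolution
    return x, y


def world_to_pixel(x, y, height, width, resolution=MAP_RESOLUTION):
    """Convert (x, y) in meters to the nearest pixel (row, col)."""
    col = int(round(x / resolution + width / 2.0))
    row = int(round(y / resolution + height / 2.0))
    return row, col


if __name__ == "__main__":
    # For a 1000 x 1000 map, the center pixel maps to the origin.
    print(pixel_to_world(500, 500, 1000, 1000))
    print(world_to_pixel(1.0, -2.0, 1000, 1000))
```

If the maps turn out to be flipped along one axis relative to the mesh, negate the corresponding offset in both functions.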

Downloading the Matterport3D Dataset of Scenes

What will you download?

Matterport3D Dataset: 90 scenes (3.2GB)

Please fill in this form and send it to matterport3d@googlegroups.com. Please put “use with iGibson simulator” in your email.

You’ll then receive a Python script via email in response. Use the received script to download the data (3.2GB) by running python download_mp.py --task_data igibson -o . Afterwards, move all the scenes to the path set in your_installation_path/igibson/global_config.yaml (default and recommended: g_dataset: your_installation_path/igibson/data/g_dataset).

Reference: Matterport3D webpage.